Key Elements of the 20-Point Peace Proposal for Ukraine

A Gazan Girl’s Battle Against Severe Hunger

Libya’s Chief of General Staff and 4 Others Killed in Plane Crash in Turkey

I Remade Google’s Ad with My Child’s Stuffed Animal, and Now I Regret It

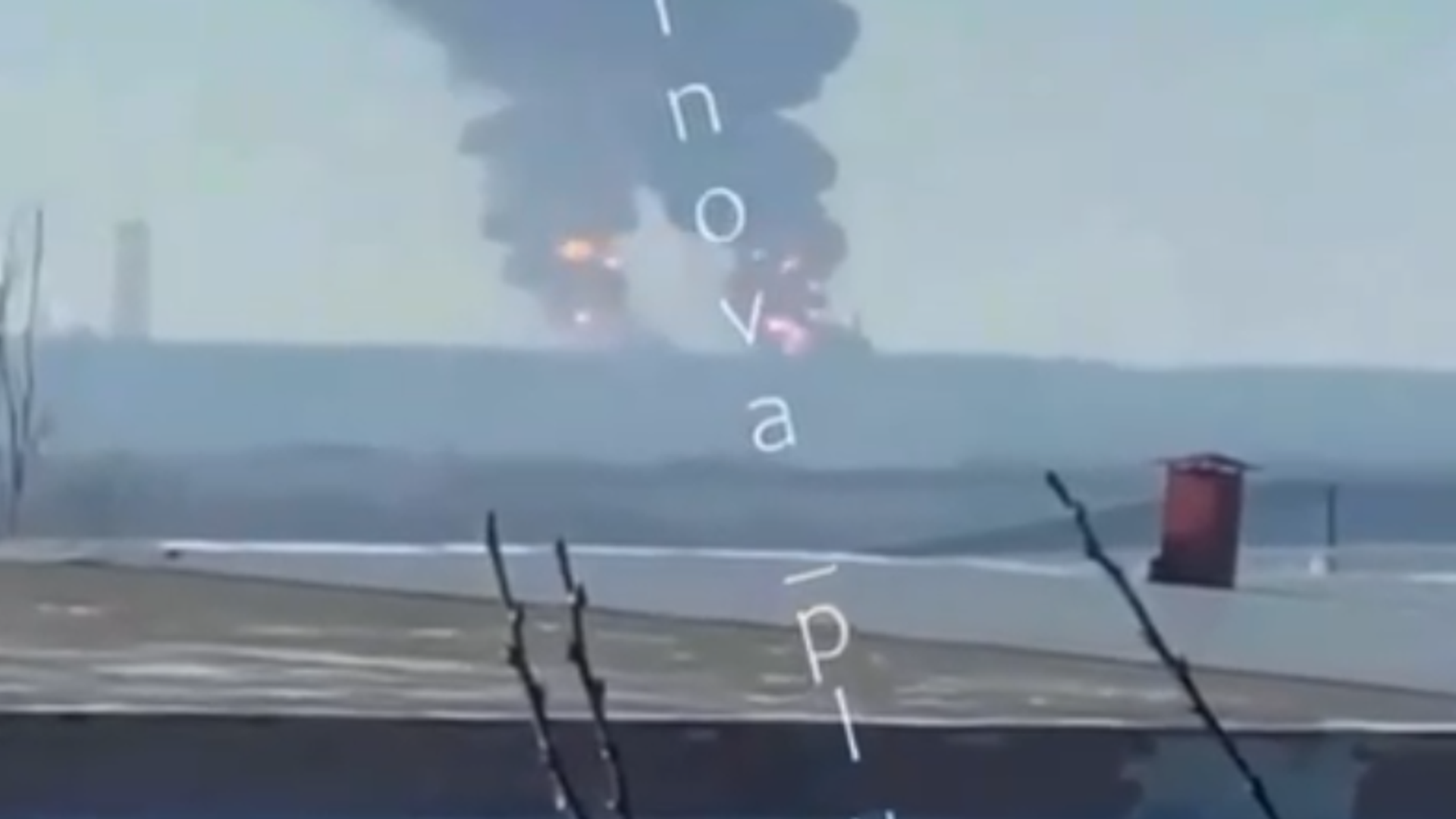

Ukraine Strikes Key Russian Oil Refinery Using British Missiles, Officials Report

From Margins to Dominance: The Transformation of India’s Hindu Right

Efforts to Combat Online Hate Face U.S. Censorship Claims

Father-Son Duo Launches $108 Billion Hostile Takeover of Warner Bros. Discovery

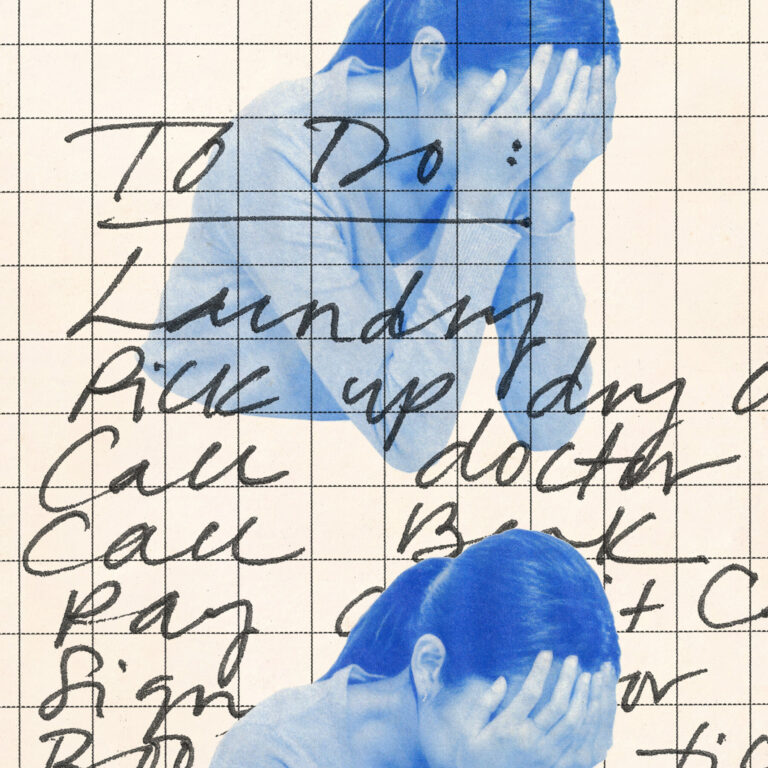

Court Affirms Government’s Right to Cut Funding for Planned Parenthood